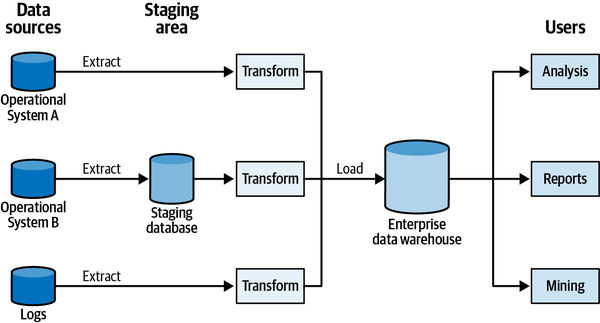

Relatively straightforward: extract data from all the enterprise’s operational systems, transform into an analytical model, load into a data analysis-oriented database. The resultant database is the data warehouse.

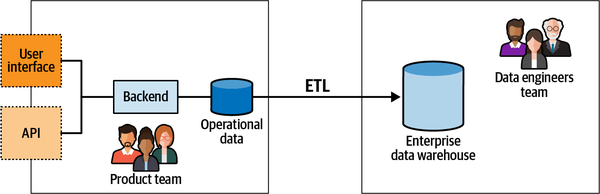

Based primarily on the ETL scripts.

Data can come from various sources:

- operational databases

- streaming events

- logs etc.

When translating from operational systems data to analytical data may include additional steps to remove sensitive data, deduplicate records, reorder, aggregate etc. In some cases transformation may require temporary storage for the incoming data - staging area.

Challenges

- Because it tries to build enterprise-wide model, each single model should support different use cases (build reports, train ML models etc). This approach is only practical for smallest organizations. Can be addressed with data marts, but if data mart is populated from data warehouse itself, the model still needs to be defined in the warehouse.

- ETL processes create a a strong coupling between analytical and operational systems. Because the data consumed from operational systems is read directly from its databases, implementation details leak into the ETL scripts. And a slight change in the schema will probably break it. When different teams work on operational system and data warehouse, communication challenges occur and leads to lots of friction.